In this chapter you will learn how to start a simulation and how to use the IPSL models and tools.

Once you have defined and set up your simulation you can submit it. The submission commands are:

    curie > ccc_msub Job_MYJOBNAME
    ada > llsubmit Job_MYJOBNAME

These commands return a job number that you can use with the machine-specific commands to manage your job. Please refer to the environment page of your machine.

Before starting a simulation it is very important to double check that it was properly set up. We strongly encourage you to perform a short test before starting a long simulation.

The job you just submitted is the first element of a chain of jobs whose composition depends on the kind of job you submitted. The chain includes the production job itself and post processing jobs such as rebuild, pack, create_ts, create_se, monitoring and atlas, which are started at given frequencies.

Post processing jobs will be automatically started at the end of the simulation.

If you recompile the model during a simulation

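A run.card file is created as soon as your simulation starts. It contains information about your simulation, in particular the PeriodState parameter, which is one of "Start", "OnQueue", "Running" or "Completed", or signals a Fatal error if the simulation aborted.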

    From : no-reply.tgcc@cea.fr
    Object : CURFEV13 completed
    Dear Jessica,
    Simulation CURFEV13 is completed on supercomputer curie3820.
    Job started : 20000101
    Job ended : 20001231
    Output files are available in /ccc/store/.../IGCM_OUT/IPSLCM5A/DEVT/pdControl/CURFEV13
    Files to be rebuild are temporarily available in /ccc/scratch/.../IGCM_OUT/IPSLCM5A/DEVT/pdControl/CURFEV13/REBUILD
    Pre-packed files are temporarily available in /ccc/scratch/.../IGCM_OUT/IPSLCM5A/DEVT/pdControl/CURFEV13
    Script files, Script Outputs and Debug files (if necessary) are available in /ccc/work/.../modipsl/config/IPSLCM5_v5/CURFEV13

    From : no-reply.tgcc@cea.fr
    Object : CURFEV13 failed
    Dear Jessica,
    Simulation CURFEV13 is failed on supercomputer curie3424.
    Job started : 20000101
    Job ended : 20001231
    Output files are available in /ccc/store/.../IGCM_OUT/IPSLCM5A/DEVT/pdControl/CURFEV13
    Files to be rebuild are temporarily available in /ccc/scratch/.../IGCM_OUT/IPSLCM5A/DEVT/pdControl/CURFEV13/REBUILD
    Pre-packed files are temporarily available in /ccc/scratch/.../IGCM_OUT/IPSLCM5A/DEVT/pdControl/CURFEV13
    Script files, Script Outputs and Debug files (if necessary) are available in /ccc/work/.../modipsl/config/IPSLCM5_v5/CURFEV13

    cd $SUBMIT_DIR    # i.e. modipsl/config/LMDZOR_v5/DIADEME
    cp ../../../libIGCM/clean_month.job . ; chmod 755 clean_month.job    # Once and for all
    ./clean_month.job    # Answer the questions
    # Same for clean_year.job
    ccc_msub Job_EXP00    # or: llsubmit Job_EXP00

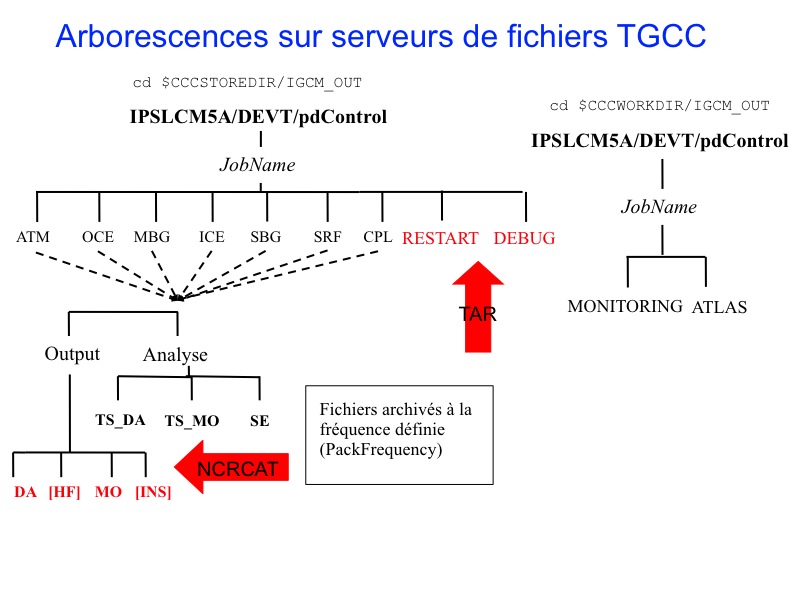

The output files are stored on file servers. Their names follow a standardized nomenclature: IGCM_OUT/TagName/[SpaceName]/[ExperimentName]/JobName/, with separate subdirectories for the "Output" and "Analyse" parts of each component (e.g. ATM/Output, ATM/Analyse) and for DEBUG, RESTART, ATLAS and MONITORING.
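As an illustration of this nomenclature, here is a minimal Python sketch that assembles the directory for one component. The function `output_dir` and its argument names are hypothetical and are not part of libIGCM. SpaceName and ExperimentName are treated as optional because they appear in brackets above, and the example values are taken from the notification e-mails shown earlier in this chapter.

```python
import posixpath


def output_dir(tag_name, job_name, space_name=None, experiment_name=None,
               component=None, kind=None):
    """Assemble IGCM_OUT/TagName/[SpaceName]/[ExperimentName]/JobName[/Component/Kind]."""
    parts = ["IGCM_OUT", tag_name, space_name, experiment_name, job_name,
             component, kind]
    # Optional elements left as None are simply skipped.
    return posixpath.join(*[p for p in parts if p])


print(output_dir("IPSLCM5A", "CURFEV13", "DEVT", "pdControl", "ATM", "Output"))
```

The result is a path relative to the file server root, which differs from one machine to another.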

Prior to the packs execution, this directory structure is stored

After the packs execution (see diagram below), this tree is stored

At the end of your simulation, the PeriodState parameter of the run.card file indicates whether the simulation has been completed or was aborted due to a Fatal error.

This file contains the following sections:

    [Configuration]
    #last PREFIX
    OldPrefix= # ---> prefix of the last created files during the simulation = JobName + date of the last period. Used for the Restart
    #Compute date of loop
    PeriodDateBegin= # --->start date of the next period to be simulated
    PeriodDateEnd= # ---> end date of the next period to be simulated
    CumulPeriod= # ---> number of already simulated periods
    # State of Job "Start", "Running", "OnQueue", "Completed"
    PeriodState="Completed"
    SubmitPath= # ---> Submission directory

    [PostProcessing]
    TimeSeriesRunning=n # ---> indicates if the timeSeries are running
    TimeSeriesCompleted=20091231 # ---> indicates the date of the last TimeSerie produced by the post processing

    [Log]
    # Executables Size
    LastExeSize=()
    #---------------------------------
    # CumulPeriod | PeriodDateBegin | PeriodDateEnd | RunDateBegin | RunDateEnd | RealCpuTime | UserCpuTime | SysCpuTime | ExeDate
    # 1 | 20000101 | 20000131 | 2013-02-15T16:14:15 | 2013-02-15T16:27:34 | 798.33000 | 0.37000 | 3.05000 | ATM_Feb_15_16:13-OCE_Feb_15_15:56-CPL_Feb_15_15:43
    # 2 | 20000201 | 20000228 | 2013-02-15T16:27:46 | 2013-02-15T16:39:44 | 718.16000 | 0.36000 | 3.39000 | ATM_Feb_15_16:13-OCE_Feb_15_15:56-CPL_Feb_15_15:43
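To check a run.card without opening it by hand, a small script can extract the PeriodState value and the per-period timings from the [Log] table. The following Python sketch is only an illustration: the helper names `period_state` and `log_entries` are hypothetical, and the parsing assumes the layout of the excerpt above (a quoted PeriodState value and log rows with nine fields separated by `|`).

```python
import re

# Shortened copy of the run.card excerpt shown above.
SAMPLE_RUN_CARD = '''\
[Configuration]
PeriodDateBegin= # --->start date of the next period to be simulated
CumulPeriod= # ---> number of already simulated periods
PeriodState="Completed"
[Log]
# CumulPeriod | PeriodDateBegin | PeriodDateEnd | RunDateBegin | RunDateEnd | RealCpuTime | UserCpuTime | SysCpuTime | ExeDate
# 1 | 20000101 | 20000131 | 2013-02-15T16:14:15 | 2013-02-15T16:27:34 | 798.33000 | 0.37000 | 3.05000 | ATM_Feb_15_16:13-OCE_Feb_15_15:56-CPL_Feb_15_15:43
# 2 | 20000201 | 20000228 | 2013-02-15T16:27:46 | 2013-02-15T16:39:44 | 718.16000 | 0.36000 | 3.39000 | ATM_Feb_15_16:13-OCE_Feb_15_15:56-CPL_Feb_15_15:43
'''


def period_state(text):
    """Return the PeriodState value without quotes, or None if it is absent."""
    match = re.search(r'^PeriodState\s*=\s*"?([A-Za-z]+)"?', text, re.MULTILINE)
    return match.group(1) if match else None


def log_entries(text):
    """Return (CumulPeriod, PeriodDateBegin, PeriodDateEnd, RealCpuTime) per logged period."""
    entries = []
    for line in text.splitlines():
        fields = [f.strip() for f in line.lstrip("#").split("|")]
        # Data rows have nine fields and start with an integer period counter;
        # the header row and all other lines are skipped.
        if len(fields) == 9 and fields[0].isdigit():
            entries.append((int(fields[0]), fields[1], fields[2], float(fields[5])))
    return entries


print(period_state(SAMPLE_RUN_CARD))
for entry in log_entries(SAMPLE_RUN_CARD):
    print(entry)
```

For a real simulation, read the text from the run.card file in your submission directory instead of using the embedded sample.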

A Script_Output_JobName file is created for each job executed. It contains the simulation job output log (list of the executed scripts, management of the I/O scripts).

This file contains three parts: copying the input files, running the model, and post processing. These parts are delimited in the file as follows:

    #######################################
    #       ANOTHER GREAT SIMULATION      #
    #######################################
    1st part (copying the input files)
    #######################################
    #       DIR BEFORE RUN EXECUTION      #
    #######################################
    2nd part (running the model)
    #######################################
    #       DIR AFTER RUN EXECUTION       #
    #######################################
    3rd part (post processing)
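When a Script_Output file is long, it can help to split it at these banners before reading it. The Python sketch below is a hypothetical helper, not a libIGCM tool: it assumes the three banner titles appear exactly as shown above, and the sample text uses placeholder lines instead of real log content.

```python
MARKERS = (
    "ANOTHER GREAT SIMULATION",   # 1st part: copying the input files
    "DIR BEFORE RUN EXECUTION",   # 2nd part: running the model
    "DIR AFTER RUN EXECUTION",    # 3rd part: post processing
)

SAMPLE_SCRIPT_OUTPUT = """\
#######################################
#       ANOTHER GREAT SIMULATION      #
#######################################
copying input files (placeholder line)
#######################################
#       DIR BEFORE RUN EXECUTION      #
#######################################
running the model (placeholder line)
#######################################
#       DIR AFTER RUN EXECUTION       #
#######################################
post processing (placeholder line)
"""


def split_script_output(text):
    """Map each banner title to the non-empty lines that follow it."""
    parts = {}
    current = None
    for line in text.splitlines():
        title = line.strip("# ")
        if line.startswith("#") and title in MARKERS:
            current = title
            parts[current] = []
        elif current is not None and line.strip("#").strip():
            # Keep content lines; skip blank lines and rows made only of '#'.
            parts[current].append(line)
    return parts


for title, lines in split_script_output(SAMPLE_SCRIPT_OUTPUT).items():
    print(title, "->", len(lines), "line(s)")
```

If a run stops early, the later banners are simply missing from the result, which already tells you in which part the job stopped.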

If the run.card file indicates a problem at the end of the simulation, check your Script_Output file for more details.

A Debug/ directory is created if the simulation crashed. This directory contains text files from each of the model components to help you find the reasons for the crash. See also the chapter on monitoring and debugging.

The job automatically runs post processing jobs during the simulation and at different frequencies. There are two kinds of post processing: required post processing such as rebuild and pack, and optional post processing such as TimeSeries and seasonal means.

Here is a diagram describing the job sequence.

You must specify in config.card the kind and frequency of the post processing.

    #========================================================================
    #D-- Post -
    [Post]
    #D- Do we rebuild parallel output, this flag determines
    #D- frequency of rebuild submission (use NONE for DRYRUN=3)
    RebuildFrequency=1Y
    #D- frequency of pack post-treatment : DEBUG, RESTART, Output
    PackFrequency=1Y
    #D- Do we rebuild parallel output from archive (use NONE to use SCRATCHDIR as buffer)
    RebuildFromArchive=NONE
    #D- If you want to produce time series, this flag determines
    #D- frequency of post-processing submission (NONE if you don't want)
    TimeSeriesFrequency=10Y
    #D- If you want to produce seasonal average, this flag determines
    #D- the period of this average (NONE if you don't want)
    SeasonalFrequency=10Y
    #D- Offset for seasonal average first start dates ; same unit as SeasonalFrequency
    #D- Usefull if you do not want to consider the first X simulation's years
    SeasonalFrequencyOffset=0
    #========================================================================

If you do not want any optional post processing, set both TimeSeriesFrequency and SeasonalFrequency to NONE.

Note: if JobType=DEV, the RebuildFrequency parameter is forced to the PeriodLength value, so one rebuild job is started per simulated period. This is discouraged for long simulations.

The model outputs are concatenated before being stored on file servers. The concatenation frequency is set by the PackFrequency parameter. If this parameter is not set, the rebuild frequency (RebuildFrequency) is used instead.
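The rules above (NONE disables an optional post processing, and PackFrequency falls back to RebuildFrequency) can be checked quickly on a [Post] section. This Python sketch uses the standard `configparser` module and is only an illustration: the function `effective_frequencies` is hypothetical, and it assumes the section contains plain `key=value` lines and `#` comments, as in the excerpt of config.card above.

```python
import configparser

# A [Post] section without PackFrequency, to exercise the fallback rule.
SAMPLE_POST = """\
[Post]
RebuildFrequency=1Y
RebuildFromArchive=NONE
TimeSeriesFrequency=10Y
SeasonalFrequency=NONE
"""


def effective_frequencies(card_text):
    """Return the frequency used for each kind of post processing."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep the CamelCase option names as written
    parser.read_string(card_text)
    post = parser["Post"]
    rebuild = post.get("RebuildFrequency")
    return {
        "Rebuild": rebuild,
        # PackFrequency falls back to RebuildFrequency when it is not set.
        "Pack": post.get("PackFrequency") or rebuild,
        # A missing optional frequency is reported as NONE (an assumption of this sketch).
        "TimeSeries": post.get("TimeSeriesFrequency", "NONE"),
        "Seasonal": post.get("SeasonalFrequency", "NONE"),
    }


for kind, frequency in effective_frequencies(SAMPLE_POST).items():
    print(kind, frequency)
```

The sketch does not model the JobType=DEV case, where RebuildFrequency is overridden by PeriodLength.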

This packing step is performed by the PACKRESTART, PACKDEBUG jobs (started by the main job) and PACKOUTPUT (started by the Rebuild job).

All files listed below are archived or concatenated at the same frequency (PackFrequency)

A Time Series is a file that contains a single variable, either over the whole simulation period (ChunckJob2D = NONE) or over shorter periods, for 2D (ChunckJob2D = 100Y) or 3D (ChunckJob3D = 50Y) variables.

Example for LMDZ:

    [OutputFiles]
    List= (histmth.nc, ${R_OUT_ATM_O_M}/${PREFIX}_1M_histmth.nc, Post_1M_histmth), \
    ...
    [Post_1M_histmth]
    Patches= (Patch_20091030_histcom_time_axis)
    GatherWithInternal = (lon, lat, presnivs, time_counter, aire)
    TimeSeriesVars2D = (bils, cldh, ... )
    ChunckJob2D = NONE
    TimeSeriesVars3D = ()
    ChunckJob3D = NONE

The Time Series coming from monthly (or daily) output files are stored on the file server in the IGCM_OUT/TagName/[SpaceName]/[ExperimentName]/JobName/Component/Analyse/TS_MO and TS_DA directories.

You can add variables to the TimeSeries lists, or remove variables from them, according to your needs.
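Editing these lists by hand is straightforward, but for repeated changes a small helper can rewrite one list line. The Python sketch below is hypothetical and not part of libIGCM. It assumes the list fits on a single `Name = (a, b, ...)` line, and the added variable `t2m` is only an illustrative name.

```python
import re


def edit_variable_list(line, add=(), remove=()):
    """Return a 'Name = (a, b, ...)' line with variables added or removed."""
    match = re.fullmatch(r"\s*(\w+)\s*=\s*\((.*)\)\s*", line)
    if match is None:
        raise ValueError("not a 'Name = (a, b, ...)' line: %r" % line)
    name, body = match.groups()
    variables = [v.strip() for v in body.split(",") if v.strip()]
    variables = [v for v in variables if v not in remove]
    # Append new variables, skipping those already present in the list.
    variables += [v for v in add if v not in variables]
    return "%s = (%s)" % (name, ", ".join(variables))


print(edit_variable_list("TimeSeriesVars2D = (bils, cldh)", add=["t2m"], remove=["cldh"]))
```

Lists that continue over several lines with a trailing backslash are not handled by this sketch.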

There are as many time series jobs as there are ChunckJob3D values. This can result in several create_ts jobs (automatically started by the computing sequence).

You can add or change the variables to be monitored by editing the configuration files of the monitoring. Those files are defined by default for each component.

The monitoring is defined in ~compte_commun/atlas. For example, for LMDZ: monitoring01_lmdz_LMD9695.cfg

You can change the monitoring by creating a POST directory as part of your configuration. Copy a .cfg file into it and change it the way you want. You will find two examples in special post processing.

Be careful: to calculate a variable from two variables you must define the operation within parentheses:

    #-----------------------------------------------------------------------------------------------------------------
    # field | files patterns | files additionnal | operations | title | units | calcul of area
    #-----------------------------------------------------------------------------------------------------------------
    nettop_global | "tops topl" | LMDZ4.0_9695_grid.nc | "(tops[d=1]-topl[d=2])" | "TOA. total heat flux (GLOBAL)" | "W/m^2" | "aire[d=3]"
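Before submitting a modified monitoring file, you may want to check that each line has the expected seven columns and that combined variables are parenthesized. The Python sketch below is a hypothetical checker written from the example line above: the column names in `FIELDS` are labels chosen for this sketch, and the parenthesis rule is applied whenever the files pattern lists more than one variable.

```python
FIELDS = ("field", "files_patterns", "files_additional", "operations",
          "title", "units", "area")


def parse_monitoring_line(line):
    """Split one '|'-separated monitoring line into a dict keyed by FIELDS."""
    values = [v.strip().strip('"') for v in line.split("|")]
    if len(values) != len(FIELDS):
        raise ValueError("expected %d columns, got %d" % (len(FIELDS), len(values)))
    return dict(zip(FIELDS, values))


def operation_is_valid(row):
    """An operation combining several variables must be wrapped in parentheses."""
    if len(row["files_patterns"].split()) < 2:
        return True
    operation = row["operations"]
    return operation.startswith("(") and operation.endswith(")")


line = ('nettop_global | "tops topl" | LMDZ4.0_9695_grid.nc | '
        '"(tops[d=1]-topl[d=2])" | "TOA. total heat flux (GLOBAL)" | '
        '"W/m^2" | "aire[d=3]"')
row = parse_monitoring_line(line)
print(row["field"], row["operations"], operation_is_valid(row))
```

Comment lines starting with `#` should be skipped before calling `parse_monitoring_line`.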

The files produced by ATLAS, MONITORING, time series and seasonal means are stored in the directories:

The post processing output log files are :

In these directories, you find the job output files: rebuild, pack*, ts, se, atlas, monitoring.

Note: The scripts that transfer data to dods are run at the end of the monitoring job or at the end of each atlas job.